Summary

If you try to run a scheduled Azure WebJob in the free tier it will stop running after about twenty minutes. This is because the free tier lacks the Always On feature offered by the premium (paid for) tiers.

In this article I present a cunning[1] way to get round that problem so your WebJobs will run forever without interruption. You'll also learn how to query a specific URL using PowerShell in an Azure WebJob (there are a couple of issues that might crop up).

As usual, there is (a tiny bit of) code in GitHub.

Background

The other day I needed to start running a couple of simple CRON jobs for a friend of mine. Without going into too much detail here, it transpired that I just needed to make a couple of very specific web requests every five minutes or so.

Whilst there are various companies that offer this kind of service, the one my friend was using kept failing to run so I thought it might be fun to do it myself (as cheaply as possible).

Azure WebJobs to the rescue

I've written a bit about using Azure WebJobs before (if you have a Ghost blog running in Azure, you should read my post about backing up the database with zero downtime). In that article I noted that "In order to guarantee to run your WebJobs successfully, your Web App needs to be Always On."

If you run an Azure Web App in the free tier, it will stop running after about twenty minutes and will restart automatically the next time you visit the site. In other words, the application pool timeout is set quite low and it's not just an idle timeout; this recycling can happen even if you poll the app regularly. This makes sense, especially when you consider that the paid tiers offer an Always On feature which prevents the application pool from stopping due to inactivity.

See the documentation from Microsoft and pay particular attention to this bit:

- Web apps in Free mode can time out after 20 minutes if there are no requests to the scm (deployment) site and the web app's portal is not open in Azure. Requests to the actual site will not reset this.

Once I read that I found myself wondering if there was a way I could make some requests to the scm/kudu/deployment site and thus prevent this timeout from occurring.

Brief Aside: If you're thinking I should be using Azure Functions, you might be right. I will revisit the same task using Azure Functions in a future article.

As luck would have it, when you query the log files of your WebJobs, you do so using Kudu. This requires you to be logged in.

At this point you might be wondering if there's a way you can write a WebJob that can query its own scm/kudu/deployment site. There is.

PowerShell to GET a URL

This is not quite as straightforward as you might think. What works on your PC might not work in the context of an Azure WebJob.

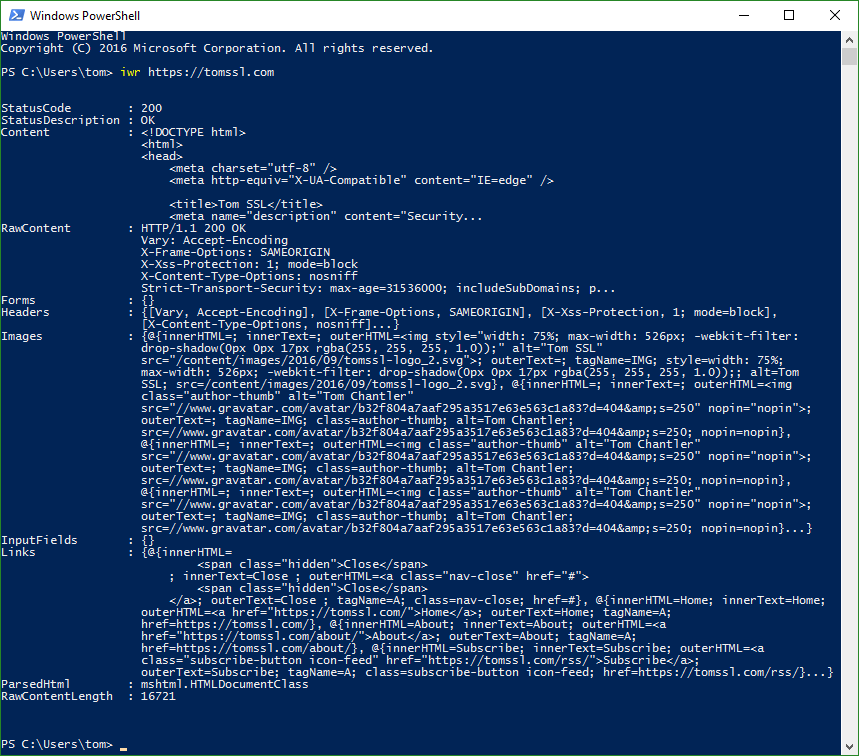

Let's imagine that you just want to issue a GET request to https://tomssl.com.

If you run PowerShell on your local machine and do this (remembering that iwr is shorthand for Invoke-WebRequest):

iwr https://tomssl.com

you'll get something like this:

If you save that as a simple PowerShell file and run it as a WebJob (instructions later on in this article), it will appear to run fine but, when you examine the log file, you'll see it contains an error.

Viewing the log files at https://[websitename].scm.azurewebsites.net/azurejobs/#/jobs reveals something like this:

[12/17/2016 00:21:00 > 444cfd: SYS INFO] Status changed to Initializing

[12/17/2016 00:21:00 > 444cfd: SYS INFO] Run script 'keepalive.ps1' with script host - 'PowerShellScriptHost'

[12/17/2016 00:21:00 > 444cfd: SYS INFO] Status changed to Running

[12/17/2016 00:21:08 > 444cfd: ERR ] iwr : The response content cannot be parsed because the Internet Explorer

[12/17/2016 00:21:08 > 444cfd: ERR ] engine is not available, or Internet Explorer's first-launch configuration is

[12/17/2016 00:21:08 > 444cfd: ERR ] not complete. Specify the UseBasicParsing parameter and try again.

[12/17/2016 00:21:08 > 444cfd: ERR ] At D:\local\Temp\jobs\triggered\keepalive\qjwex2ey.oqu\keepalive.ps1:1

[12/17/2016 00:21:08 > 444cfd: ERR ] char:1

[12/17/2016 00:21:08 > 444cfd: ERR ] + iwr https://[website-name].azurewebsites.net/

[12/17/2016 00:21:08 > 444cfd: ERR ] + ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

[12/17/2016 00:21:08 > 444cfd: ERR ] + CategoryInfo : NotImplemented: (:) [Invoke-WebRequest], NotSupp

[12/17/2016 00:21:08 > 444cfd: ERR ] ortedException

[12/17/2016 00:21:08 > 444cfd: ERR ] + FullyQualifiedErrorId : WebCmdletIEDomNotSupportedException,Microsoft.Po

[12/17/2016 00:21:08 > 444cfd: ERR ] werShell.Commands.InvokeWebRequestCommand

[12/17/2016 00:21:08 > 444cfd: ERR ]

[12/17/2016 00:21:08 > 444cfd: SYS INFO] Status changed to Success

It's trying to use the Internet Explorer engine, which is not available. If we make the suggested fix (-UseBasicParsing, which apparently also helps with some cookie issues that may crop up), it still won't work, as it will try to invoke the UI to show a progress bar. To fix that we need to set $progressPreference = "silentlyContinue" as per this MSDN article (you need to search in the page to find it). We could also assign the output to a variable rather than writing it to the screen (or log file).

If you get an error like:

Invoke-WebRequest : Win32 internal error "The handle is invalid" 0x6 occurred while reading the console output buffer. Contact Microsoft Customer Support Services.

then you need to set $progressPreference = "silentlyContinue" in your script before you invoke the web request.

Thus we end up with a (slightly less) simple PowerShell script which looks like this:

$progressPreference = "silentlyContinue";

$result = Invoke-WebRequest -Uri ("https://tomssl.com") -Method Get -UseBasicParsing;

This will run successfully and produce an output like this:

[12/17/2016 00:23:30 > 444cfd: SYS INFO] Status changed to Initializing

[12/17/2016 00:23:30 > 444cfd: SYS INFO] Job directory change detected: Job file 'keepalive.ps1' timestamp differs between source and working directories.

[12/17/2016 00:23:30 > 444cfd: SYS INFO] Run script 'keepalive.ps1' with script host - 'PowerShellScriptHost'

[12/17/2016 00:23:30 > 444cfd: SYS INFO] Status changed to Running

[12/17/2016 00:23:32 > 444cfd: SYS INFO] Status changed to Success

If you delete the WebJob and recreate it with the same name, the first time it runs it might mention the differing timestamps, as above.
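The two-line script above throws the response away, so the log only tells you that the job ran. If you would like the log to show what came back, something along these lines might do. It is only a sketch: the function name Invoke-KeepAlive is made up for illustration and is not part of the original script.

```powershell
# Suppress the progress bar, which cannot be drawn in the WebJob environment.
$progressPreference = "silentlyContinue"

# Hypothetical helper (not in the original script): GET a URL and
# write one line containing the HTTP status code to the output.
function Invoke-KeepAlive {
    param([Parameter(Mandatory = $true)][string]$Uri)

    # -UseBasicParsing avoids the dependency on the Internet Explorer engine.
    $response = Invoke-WebRequest -Uri $Uri -Method Get -UseBasicParsing
    Write-Output ("GET {0} returned {1}" -f $Uri, $response.StatusCode)
}
```

You would call it with Invoke-KeepAlive -Uri "https://tomssl.com" at the end of the script. Wrapping that call in try/catch and ending with exit 1 on failure should, as far as I can tell, make the run show up as failed rather than as a success with errors in the log, but treat that as an assumption to check against your own logs.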

PowerShell to access Azure Web App Application Settings

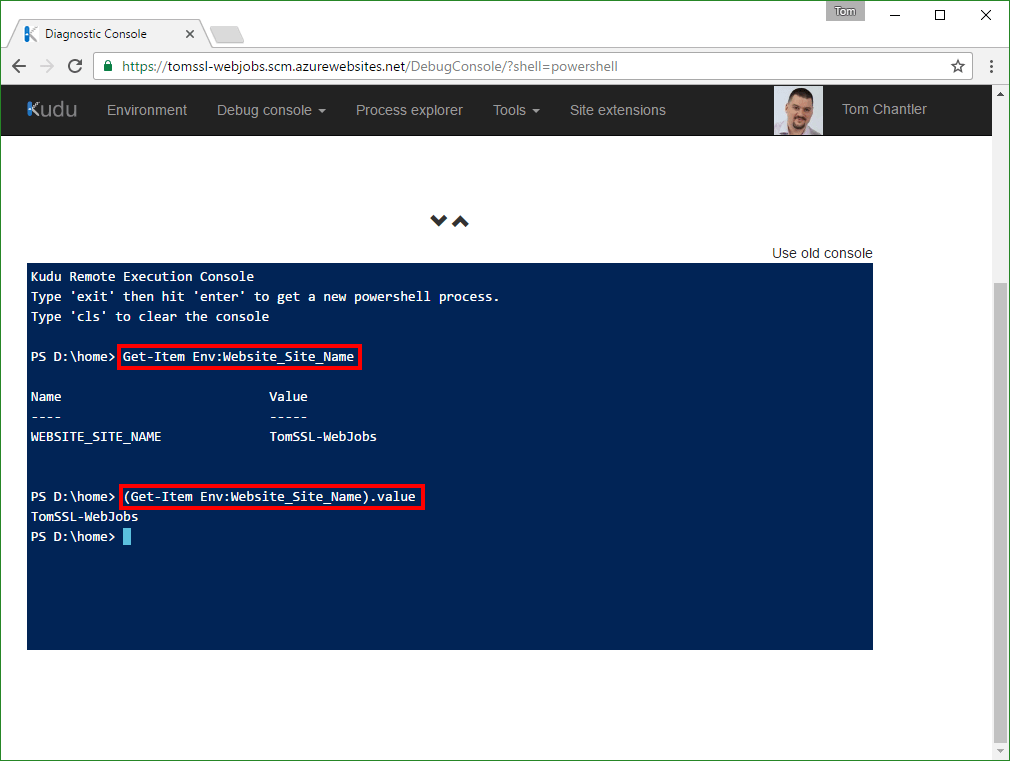

Azure Web Apps contain settings which are analogous to those contained in the <appSettings> element of the web.config file. These are exposed as environment variables which may be accessed via PowerShell from the Kudu console.

You can see these settings by going to https://[website-name].scm.azurewebsites.net/DebugConsole/?shell=powershell and typing Get-Item Env: to list them all or Get-Item Env:SPECIFIC_SETTING to get the specific setting.

The information comes back as a DictionaryEntry (a type of key-value pair), so if you want to assign the value to a specific variable, you can do it like this:

$website = (Get-Item Env:WEBSITE_SITE_NAME).value
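If the setting does not exist, the one-liner above produces an error and leaves the variable empty, which can be confusing further down the script. A small wrapper that fails with a clear message is one way round that. This is a sketch only, and Get-RequiredSetting is a hypothetical name rather than a built-in cmdlet.

```powershell
# Hypothetical helper: read an environment variable and fail loudly if it is missing.
function Get-RequiredSetting {
    param([Parameter(Mandatory = $true)][string]$Name)

    # Get-Item returns a DictionaryEntry; SilentlyContinue lets us report the problem ourselves.
    $item = Get-Item -Path "Env:$Name" -ErrorAction SilentlyContinue
    if ($null -eq $item -or [string]::IsNullOrEmpty($item.Value)) {
        throw "Environment variable '$Name' is not set."
    }
    return $item.Value
}

# WEBSITE_SITE_NAME is set by Azure; outside Azure this call would throw.
# $website = Get-RequiredSetting -Name "WEBSITE_SITE_NAME"
```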

PowerShell to access the Kudu console

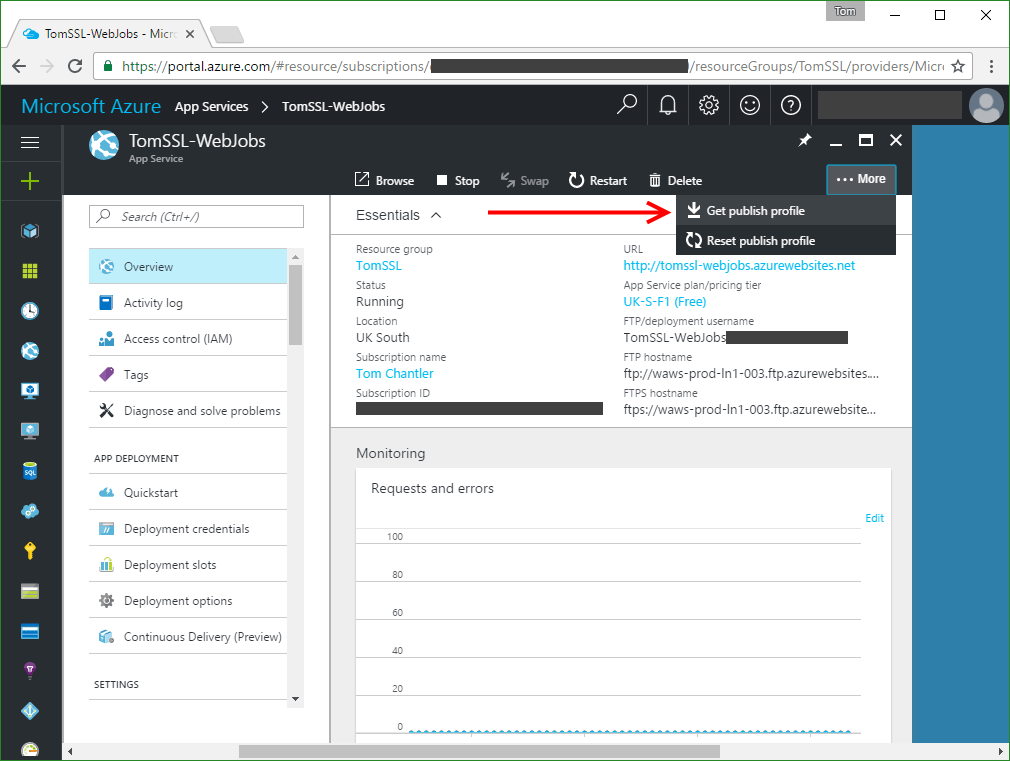

To access the Kudu console, you need valid credentials. The easiest way to get these is to download them from the Azure Portal.

The downloaded publish settings file contains two sets of credentials: one for publishing via MSDeploy and one for publishing via FTP. The passwords are the same, but the usernames differ slightly. Observe that the MSDeploy username is just the Azure website name preceded by a dollar sign and it is this one that we are going to use.

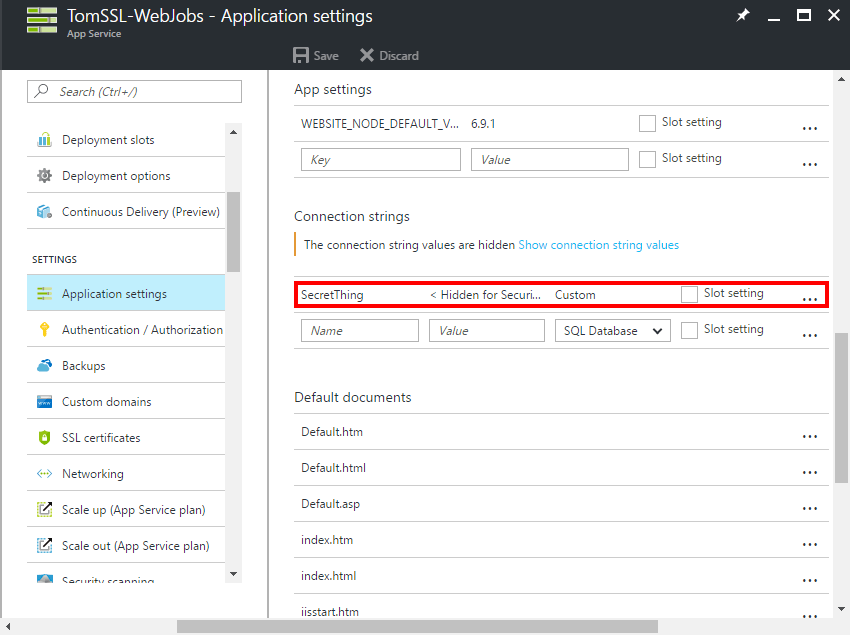

Having seen how to access the application settings using PowerShell, I decided to store the password for my Kudu access as a custom connection string (called SecretThing) in my Azure Web App via the portal. This is quite handy as the value is hidden by default when you view the site in the portal. It's not encrypted, but remember that anybody who has access to the site in the Azure Portal can simply download the publish settings, so it doesn't need to be encrypted.

If you store a custom connection string called SecretThing then its value may be assigned to a variable like this: $password = (Get-Item Env:CUSTOMCONNSTR_SecretThing).value

Once we have our username and password, it's necessary to base64 encode the credentials and send them over HTTPS using Basic authentication.

Finally we will invoke an authenticated web request to the Kudu console, to keep the web process alive. I have elected to hit the URL which returns the available versions of Node.js: https://[website-name].scm.azurewebsites.net/api/diagnostics/runtime

Thus the final PowerShell file is as follows:

$progressPreference = "silentlyContinue"

$website = (Get-Item Env:WEBSITE_SITE_NAME).value

$username = "`$${website}"

$password = (Get-Item Env:CUSTOMCONNSTR_SecretThing).value

$base64AuthInfo = [Convert]::ToBase64String([Text.Encoding]::ASCII.GetBytes(("{0}:{1}" -f $username,$password)))

$url = "https://${website}.scm.azurewebsites.net/api/diagnostics/runtime"

$keepalive = Invoke-RestMethod -Uri $url -Headers @{Authorization=("Basic {0}" -f $base64AuthInfo)} -Method GET

IMPORTANT: Remember to escape the $ from your website username with a backtick (`)[2]. For my website (tomssl-webjobs.azurewebsites.net) I want the username to end up as $tomssl-webjobs.
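To see the escaping and the encoding in isolation, the header construction can be pulled into a function and tried with made-up values. This is a sketch, not the script from the repository: New-KuduAuthHeader and the placeholder password are invented for illustration.

```powershell
# Hypothetical helper: build the Basic authentication header for the Kudu (scm) site.
function New-KuduAuthHeader {
    param(
        [Parameter(Mandatory = $true)][string]$SiteName,
        [Parameter(Mandatory = $true)][string]$Password
    )

    # The backtick stops PowerShell treating the leading $ as the start of a variable,
    # so a site called tomssl-webjobs gives the username $tomssl-webjobs.
    $username = "`$${SiteName}"
    $pair = "{0}:{1}" -f $username, $Password
    $encoded = [Convert]::ToBase64String([Text.Encoding]::ASCII.GetBytes($pair))
    return @{ Authorization = "Basic $encoded" }
}

# Example with made-up values; a real script would read these from the environment.
$headers = New-KuduAuthHeader -SiteName "tomssl-webjobs" -Password "not-a-real-password"
```

The resulting hashtable can be passed straight to the -Headers parameter of Invoke-RestMethod. Note that ASCII encoding replaces any non-ASCII character with a question mark, so this only works for passwords made up of plain ASCII characters.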

This keeps itself alive but does not keep the website alive.

In fact, the web app and Kudu are two separate entities. Since WebJobs run in Kudu, I can stop my web app and it will still run my WebJobs. I have done this for my example, as you will see if you go to https://tomssl-webjobs.azurewebsites.net/. You will get an error: Error 403 - This web app is stopped. And yet the WebJobs are still running.

If you want to keep your free Web App alive as well as the WebJobs environment, then simply upload self-web-keepalive.ps1 as a separate WebJob, also running every five minutes. It would be possible to combine these scripts into one, but I think it's more sensible to keep them separate.
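The real self-web-keepalive.ps1 lives in the GitHub repository and may well differ, but a script of that kind could look roughly like the sketch below. Get-WebAppUrl is a hypothetical helper, and the sketch assumes the site is reachable at its default azurewebsites.net hostname rather than a custom domain.

```powershell
# Suppress the progress bar, which cannot be drawn in the WebJob environment.
$progressPreference = "silentlyContinue"

# Hypothetical helper: the default public URL of a Web App, given its site name.
function Get-WebAppUrl {
    param([Parameter(Mandatory = $true)][string]$SiteName)
    return "https://${SiteName}.azurewebsites.net/"
}

# WEBSITE_SITE_NAME is only present when running inside Azure, so do nothing elsewhere.
if ($env:WEBSITE_SITE_NAME) {
    $url = Get-WebAppUrl -SiteName $env:WEBSITE_SITE_NAME
    $result = Invoke-WebRequest -Uri $url -Method Get -UseBasicParsing
}
```

No credentials are needed here because the request goes to the public site rather than to Kudu.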

To ping another website periodically, use keepalive.ps1 or a modification thereof containing the correct URL.

Scheduling the WebJob

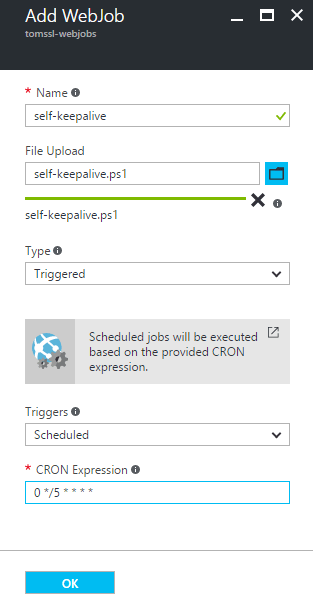

Go to the Azure Portal, select your web app, choose the WebJobs blade and click +Add.

Give the job a suitable name (I called it self-keepalive), upload self-keepalive.ps1, set it to be Triggered and Scheduled and enter 0 */5 * * * * as the CRON Expression (which will run every five minutes).

Remember the syntax for the CRON expression (which is explained in some detail here), specifically the fact that it is configurable to the second and is of the format:

{second} {minute} {hour} {day} {month} {day of the week}
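Because the extra seconds field is easy to forget, it can help to split an expression into named fields before pasting it into the portal. The function below is purely illustrative (Split-CronExpression is a made-up name) and only checks the number of fields, not whether each field is valid.

```powershell
# Hypothetical helper: label the six fields of a WebJobs CRON expression.
function Split-CronExpression {
    param([Parameter(Mandatory = $true)][string]$Expression)

    $names = 'second', 'minute', 'hour', 'day', 'month', 'dayOfWeek'
    $parts = @($Expression.Trim() -split '\s+')
    if ($parts.Count -ne $names.Count) {
        throw "Expected $($names.Count) fields but found $($parts.Count)."
    }

    # Pair each field name with the matching part, keeping the original order.
    $fields = [ordered]@{}
    for ($i = 0; $i -lt $names.Count; $i++) {
        $fields[$names[$i]] = $parts[$i]
    }
    return $fields
}

$schedule = Split-CronExpression -Expression "0 */5 * * * *"
```

Here $schedule.second is 0 and $schedule.minute is */5, which is the "every five minutes, on the minute" schedule used above. A traditional five-field expression such as */5 * * * * is rejected by the field count check.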

Checklist

- Create a Web App in the free tier to run your WebJobs.

- Download the publishing credentials.

- Create a custom connection string called SecretThing containing your publishing password.

- Upload self-keepalive.ps1 as a WebJob and set it to run every five minutes via the CRON trigger 0 */5 * * * *.

- Add any other WebJobs you want to run (e.g. self-web-keepalive.ps1 if you want to keep your free Web App running continuously).

Conclusion

If you want to run scheduled Azure WebJobs for free (perhaps you're new to Azure and you want to explore it a bit more before committing any money), then follow the steps above to create a special self-keepalive job in addition to the actual jobs you want to run.

This is not really an enterprise fix, but I'm using it and it works. If you are relying on Azure WebJobs running in the free tier to run your business then you're ~~an idiot~~ braver than I am. However, if you're doing this to keep your hobby website running or to do something else that is not mission critical, then that's cool. Since it's not officially supported, it might stop working at some point. If you notice that happen, please let me know.

Stay tuned for a future article which will show you another (officially supported) way to do this, using Azure Functions. Not only that, it still might be free due to the fairly generous free allowance.

If you found this article interesting or useful (or neither), you can comment below, subscribe for free Azure and SQL ebooks or follow me on Twitter (I'll probably follow you back).